This is the transcript of a short talk on vintage large language models.

A video of the talk is available here. A tweet thread is here and the slide deck is here.

Vintage Large Language Models: Transcript

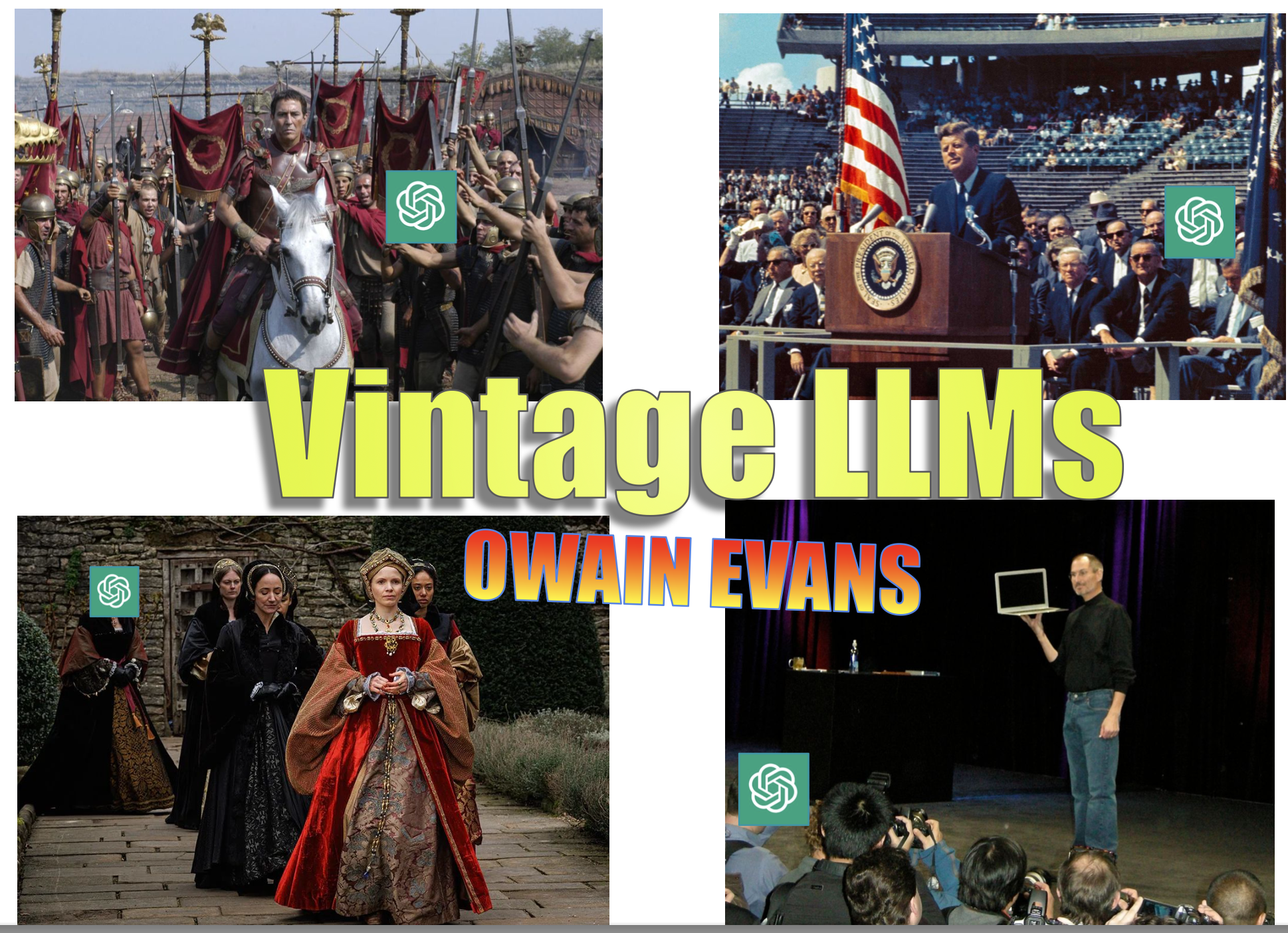

I'm going to talk about vintage large language models. The idea is to introduce language models trained on past data. We are sending language models back into the past - into Roman times, the Tudor era, the 1960s, or more recent decades.

What is a Vintage LLM?

A vintage LLM is a large language model trained on texts and potentially images or other multimodal data up to a particular date. The date could be 2019, so it's trained only on data until 2019 - that's the easier case. It could be up until 1900 or even up until 200 AD, and these historical cases are much more challenging.

One challenge is having enough training data. Another is that the training data needs to be free of contamination. For a model trained up till 1900, there needs to be no information from after 1900 that leaks into the data. Some metadata might have that kind of leakage. While it's not possible to have zero leakage - there's a shadow of the future on past data because what we store is a function of what we care about - it's possible to have a very low level of leakage, sufficient for this to be interesting.
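
As a rough illustration of the contamination point, here is a minimal sketch of a date-cutoff filter. The `Document` type, its fields, and the `filter_for_cutoff` helper are hypothetical names invented for this example. Real pipelines would also need to detect leakage inside the text itself, which this sketch does not attempt.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Optional


@dataclass
class Document:
    """Hypothetical record for one source text."""
    text: str
    written: Optional[date]   # best-known composition date; None if unknown
    metadata: str = ""        # catalogue notes, editor prefaces, and so on


def filter_for_cutoff(docs: Iterable[Document], cutoff: date) -> List[Document]:
    """Keep documents written on or before the cutoff and drop their metadata."""
    kept = []
    for doc in docs:
        # Undated documents are excluded: we cannot show they predate the cutoff.
        if doc.written is None or doc.written > cutoff:
            continue
        # Metadata is often added by later cataloguers, so it is stripped here.
        kept.append(Document(text=doc.text, written=doc.written, metadata=""))
    return kept
```

Excluding undated documents is a conservative choice that trades training volume for a lower risk of leakage.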

You can include multimodal data like images. There's something strange about including images when going back to Roman times or 1700 because while they had texts, they didn't have digital images. However, this is acceptable for some purposes. You'd want to avoid leaking information that could only be known in the present. You could include things people at the time could see and experience themselves. For example, there may be no anatomically accurate painting in Roman times of a bee or an egg cracking, but you can include such images because people could see such things, even if they weren't part of their recorded media. You could also have pictures of buildings and artifacts that we still have from the past.

Scientific and Epistemic Motivations

One motivation comes from science and epistemics. These applications will become increasingly important. We want to test approaches to using LLMs for prediction and scientific invention. There is various work on using LLMs for forecasting, such as Halawi et al. They fine-tune an LLM and build agent-like scaffolding on top of it, together with information retrieval and chain-of-thought prompting. Reinforcement learning and other techniques could be added to further optimize the LLM for forecasting.

We'd like to test how well these approaches work at turning a raw LLM into a good forecaster. We can use a vintage LLM trained up to 2019 (denoted as LLM-2019) and see how well it forecasts up to 2024 through backtesting, familiar from financial modeling. The LLM-2019 wouldn't know about the pandemic, recent wars, or major economic events of the last five years. We can test how well it could predict these things - not just predicting that there would be a pandemic, but once evidence started accumulating, could it predict what would happen next? That first year of the pandemic would be extremely interesting to test forecasting in a novel scenario where human forecasters often made mistakes and predicting the next six months was quite difficult.
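
One way to score such a backtest is the Brier score, a standard scoring rule for probabilistic forecasts of yes/no questions. The talk does not name a metric, so treat this as an assumption. The sketch below assumes the vintage model has already produced a probability for each question and that the outcomes are now known.

```python
from typing import Sequence


def brier_score(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared error between forecast probabilities and 0/1 outcomes.

    Lower is better; always answering 0.5 scores exactly 0.25.
    """
    if len(probs) != len(outcomes) or len(probs) == 0:
        raise ValueError("probs and outcomes must be non-empty and equal length")
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


# Illustrative numbers only: three questions that resolved after the cutoff.
model_probs = [0.9, 0.2, 0.5]
resolved = [1, 0, 1]
score = brier_score(model_probs, resolved)            # roughly 0.1
baseline = brier_score([0.5, 0.5, 0.5], resolved)     # 0.25
```

Comparing against the constant 0.5 baseline shows whether the scaffolding adds anything beyond an uninformed guess.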

Another use of LLMs, which is more in its infancy, is scientific invention. We probably need substantial scaffolding, information retrieval, calling out to external resources for computation, and maybe running experiments. We want to see how well LLMs today could be used for making new inventions, perhaps starting very simple. We could apply this with vintage LLMs by taking an LLM trained up to 1989 and trying to reinvent ideas from the last 35 years - ideas we know to be good, like the web, quantum computing, blockchains, transformers, behavioral economics.

You could go even further back, which becomes really fascinating. You could have an LLM trained up to 1600, before Newton's laws, the theory of evolution, and probability theory, along with an enormous amount of philosophy and science that has happened since then. While these concepts may be simpler than what we've invented in the last 35 years, it may be very difficult to create them given only what was known at the time.

Humanistic Motivations

The first humanistic motivation is time travel. What would it be like to communicate with someone from 1700? This is often depicted in movies or novels, but here you could do this interactively, providing a different and interesting source of evidence. The evidence would be skewed - if we go back to 1700, certain demographic groups will be much better represented in the data through diaries and recorded conversations. It's unclear how well we'll be able to deal with that bias in the data, but it's potentially something you could try to remedy in constructing the training set.

We can optimize this from the present - we know how people talk today and can see how well LLMs simulate that. You could apply the same optimization techniques that work well for present-day people to simulate conversations with people from the past. You could talk to famous people or the common person. Would they understand you? Would you understand them? You could also use a translator to help.

There's something interesting about LLMs in that they combine the world's written knowledge into one entity. An LLM can discuss tax law, quantum mechanics, and Haskell all in the same conversation, having knowledge no individual human has. This differs from the past, where there was more of a firewall between civilizations in sharing knowledge. If you go back to 0 AD, 500 AD, 1200, or 1500, you'll have texts in China or India unknown in the West, and vice versa.

You could train on everything, combining Western and Chinese texts in an anachronistic way - no library or scholar knew all these texts. Alternatively, you could train models just on Western texts or just on Chinese texts and examine the effects. What advantages does an LLM with this anachronistic combination have over one with just Western texts? You could explore counterfactual intellectual histories where different strands of knowledge are combined earlier than they were historically.

You could also look at the surprisingness of new ideas. For something like special relativity or Shakespeare's plays, how original were they? How surprising were they? You could take a model trained on data up to just before these works emerged and see how it reacts to them. Can it fill in the blanks given hints toward these insights? How surprising does it find the texts in terms of their predictability?
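
Predictability can be made concrete as surprisal: the negative log-probability the model assigns to a text. The sketch below assumes you already have per-token natural-log probabilities from some vintage model. How those are obtained is outside the sketch, and the function names are illustrative.

```python
import math
from typing import Sequence


def surprisal_bits(token_logprobs: Sequence[float]) -> float:
    """Total surprisal of a text in bits, from natural-log token probabilities."""
    return -sum(token_logprobs) / math.log(2)


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Exponentiated average negative log-probability per token."""
    if len(token_logprobs) == 0:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


# Toy input: four tokens, each assigned probability 0.5 by the model.
toy_logprobs = [math.log(0.5)] * 4
toy_bits = surprisal_bits(toy_logprobs)    # about 4 bits in total
toy_ppl = perplexity(toy_logprobs)         # about 2
```

Comparing the perplexity of a landmark text against ordinary texts from the same period would give one rough measure of how surprising the model finds it.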

Epistemic AI and Gold Standards

Epistemic AI has a broad definition: using AI systems to help with epistemics - making beliefs and models of the world more accurate and calibrated. Concrete applications include making more accurate forecasts, surveying scientific literature, combining existing knowledge, and helping humans invent new STEM ideas. LLMs can do this differently from something like AlphaFold because they operate in natural language, writing down scientific laws and reasoning about them logically.

When training an epistemic AI system, you need gold standard examples for training and evaluation. This is crucial. When training a base large language model, you're just training it to imitate text, not to say true things or propose scientific laws. We need gold standard examples that illustrate high-quality behavior for an epistemic AI.

There are three main sources for these examples:

Current humans (RLHF approach) - The AI system outputs a response or proposal, and humans evaluate its quality. This requires humans to judge how good the system's output is, which can be challenging.

Algorithms - Like in AlphaGo, where you know the rules of Go and can compute whether the system wins or loses. In RLHF, another model can automate human feedback, with a neural network imitating how humans would respond.

Historical data - This is the approach for vintage LLMs and is also the data source for pre-training, getting the model to predict something that was said in human text.

Challenges in Making Vintage LLMs

There are significant challenges in creating vintage LLMs:

Data Requirements: You need an enormous dataset from the past - a model might need 50 trillion words. This is extremely large, and you need to ensure there's no leakage from the future into this historical data.

Training Costs: Training a state-of-the-art model might cost upwards of $200 million, with next-generation models being even more expensive. While this isn't huge compared to all science funding, it's a significant investment.

Addressing the Challenges

Regarding data, if we go back to 2021, we still have most of the high-quality data that we have today. The highest quality data for STEM prediction and forecasting includes scientific papers, key statistics, Wikipedia, and other encyclopedias. We have high-quality economic, meteorological, chemistry, and biological data.

What we have less of for 2021, and especially for 1990, is content like Reddit - random conversations, web pages, and social media. But if you're interested in forecasting and scientific invention, this social media data might not be crucial. Going back to 1990, 1950, or even 1900, we actually have much of the most high-quality data.

One gap is that if you go back far enough, there's probably important engineering and practical understanding that wasn't written down. In 1800, people had extensive practical engineering knowledge, but we likely don't have many manuals recording this practical understanding. We could partially reconstruct this through pictures of machines, tools, artifacts, and buildings from that time.

Synthetic Data Solutions

Progress in synthetic data is potentially crucial here. While we have high-quality data from past decades and years, we don't have the same volume of data in total. Synthetic data could help bridge this gap by using another large language model to generate more training data.

The approach would be to take a real document and use another LLM to create paraphrases, scramblings, or remixes that maintain the same content but vary the phrasing and order. Synthetic data is already being used by AI labs in training - Meta discussed it for their Llama 3 training, and we can expect significant progress in this area as it allows for higher quality data without the expense of collecting more.

For vintage LLMs, synthetic data techniques need to be powerful because the need for synthetic data is greater. If you want an LLM from 1900, you gather all available data from that period and then use another LLM to generate variations. To avoid contamination, you could use a bootstrapping approach: train a weaker LLM on clean 1900 information, then use that LLM to generate synthetic data for training an improved 1900 LLM.
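
The bootstrapping idea can be sketched as a loop in which every model that generates synthetic text has itself only seen pre-cutoff material. Everything here is hypothetical: `train` stands in for a full training run, a "model" is reduced to a function from a document to variations, and `toy_train` is a trivial word-order scrambler used only to make the sketch runnable.

```python
from typing import Callable, List, Sequence

# Hypothetical stand-in: a "model" maps one document to a list of variations.
Model = Callable[[str], List[str]]


def bootstrap_corpus(
    clean_docs: Sequence[str],
    train: Callable[[Sequence[str]], Model],
    rounds: int = 1,
) -> List[str]:
    """Grow a corpus using only models trained on pre-cutoff data."""
    corpus = list(clean_docs)
    for _ in range(rounds):
        # The generator is trained only on clean text plus text derived from it.
        model = train(corpus)
        # Variations are always generated from the real documents, so errors
        # in earlier synthetic text are not paraphrased again.
        synthetic = [variant for doc in clean_docs for variant in model(doc)]
        corpus = list(clean_docs) + synthetic
    return corpus


def toy_train(corpus: Sequence[str]) -> Model:
    """Toy stand-in for training: ignores the corpus and reverses word order."""
    return lambda doc: [" ".join(reversed(doc.split()))]


grown = bootstrap_corpus(["a b c"], toy_train, rounds=2)
```

The returned corpus would then be used to train the improved model. No present-day LLM appears anywhere in the loop, which is the point of the bootstrapping approach.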

Training Cost Solutions

Regarding training costs, one potential approach is chronological training with forking. Instead of training separate models for 2021 and 2024, you could train up to 2021 and then fork the training. One path continues with additional epochs over 2021 data, while another incorporates 2022-2024 data. This saves training costs, though it might result in slightly worse models due to distribution shift.
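
To see where the saving comes from, here is a small arithmetic sketch that counts tokens processed as a rough proxy for cost. The function name and the numbers are illustrative assumptions, not figures from the talk.

```python
from typing import Sequence


def training_tokens(shared: float, branch_extras: Sequence[float], forked: bool) -> float:
    """Total tokens processed to produce one model per branch.

    shared:        tokens in the common phase (for example, data up to 2021)
    branch_extras: extra tokens each branch sees after the common phase
    forked:        if True, the common phase is run once and reused
    """
    if forked:
        return shared + sum(branch_extras)
    # Without forking, every model repeats the common phase from scratch.
    return sum(shared + extra for extra in branch_extras)


# Illustrative units: 10 for the shared phase, 1 for extra epochs on 2021
# data, and 2 for the 2022-2024 data.
separate_cost = training_tokens(10, [1, 2], forked=False)   # 23
forked_cost = training_tokens(10, [1, 2], forked=True)      # 13
```

With two branches the saving equals one pass through the shared phase, and it grows with each additional cutoff date that forks from the same run.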

The value proposition matters too - if a 2021 vintage LLM proves valuable enough, spending hundreds of millions might be justified. The costs might be lower than expected since you've already paid for the compute cluster and developed the expertise.

Advanced Concepts

Some additional ideas worth exploring:

Outsourcing Functions: Vintage LLMs could outsource some functions to current LLMs. Since 2024 LLMs are likely stronger overall, vintage LLMs could call them for certain experiments or reasoning processes, similar to Meta's Toolformer. The key is preventing information leakage from the present.

Compartmentalized LLMs: Train on all data up to 2024, but with clear date annotations on every document. This allows prompting the model for a particular date, getting responses conditioned on that time period without anachronisms. While not a true vintage LLM due to potential contamination, it could complement actual vintage LLMs, especially for outsourcing functions.
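
A minimal sketch of the date-annotation idea follows. The `[DATE: ...]` tag format and both function names are assumptions made for illustration; the talk does not specify how annotations would be written.

```python
from datetime import date


def annotate(text: str, written: date) -> str:
    """Prefix a training document with a hypothetical date tag."""
    return f"[DATE: {written.isoformat()}]\n{text}"


def dated_prompt(question: str, as_of: date) -> str:
    """Build a prompt that asks the model to answer as of a given date.

    It reuses the training-time tag so the prompt matches what the model saw.
    """
    return annotate(question, as_of)


training_example = annotate("The telegraph now links both coasts.", date(1861, 10, 24))
prompt = dated_prompt("What is the fastest way to send a message overseas?", date(1895, 3, 1))
```

Using the same tag at training and prompting time is what would let the model condition on the date. Whether it then avoids anachronisms is an empirical question.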

These ideas need further development, but they represent interesting directions for exploring this space. The field of vintage LLMs offers exciting possibilities for understanding historical knowledge development and testing AI capabilities in novel ways.